Thinking in Columns, Not Tunnels: How ACH Could Sharpen Forensic Science*

Another in a series on FORINT (Forensic Intelligence)

Analysis of Competing Hypotheses

I’ve written and taught about using the methods of the intelligence community (or IC, as they say) in forensic investigations (or investigations of any kind, really). In the program I created at the University of South Florida, one entire class was devoted to learning these methods and applying them to investigations with physical evidence. Sadly, that approach has not been widely adopted (to my knowledge) but, as with many of the ideas I have had, I’ll keep advocating it, like here in my Substack.[1]

We like to think we’re impartial in investigations, that we follow the evidence wherever it leads, without prejudice or preconception.[2] But anyone who’s actually worked cases knows how quickly an early hunch can set like concrete. One piece of evidence, a partial fingerprint, a DNA hit, a fiber that lines up, and suddenly everything else gets interpreted in its light. It’s not malice; it’s human nature. And it’s one reason wrongful convictions happen. This is why frameworks have been designed that can be practically incorporated into any forensic discipline to improve decision quality. They increase the repeatability, reproducibility, and transparency of decisions made by forensic scientists* and reduce bias. That covers the information coming in, however; we still need a way to parse, evaluate, and decide on the information we have and report on.

The intelligence community learned this lesson long ago, often the hard way. To counteract those cognitive shortcuts, analysts developed structured analytic techniques (SATs) to make their reasoning explicit, transparent, and open to challenge. One of the most useful for investigations is the Analysis of Competing Hypotheses (ACH). I’ve taught and published on ACH in forensic contexts for years, and it remains one of the most underused tools in criminal investigation.

The Premise: Compete, Don’t Confirm

ACH flips the usual investigative mindset. Rather than picking a “most likely” theory and stacking evidence to support it,[3] ACH starts by laying out all plausible, mutually exclusive hypotheses, not just one or two;[4] I’d tell my students to come up with 5 or 7. Each piece of significant evidence, regardless of whether it’s a lab result, witness statement, or financial record, is then evaluated against each hypothesis. You’re not looking for the biggest pile of supporting facts; you’re looking for the hypothesis that has the fewest inconsistencies with the totality of the evidence, the least refuted hypothesis.

This matters because in science you can never truly “prove” a hypothesis; you can only fail to disprove it. ACH operationalizes that idea. The aim isn’t to find a narrative that feels right; it’s to find the one that survives repeated attempts at refutation.[5]

Why Forensic Science Needs ACH

Forensic evidence is often treated as an anchor: reliable, objective, and decisive. But anchoring is a bias trap.[6] A DNA result may be rock-solid scientifically, yet the inference that the person who deposited the DNA committed the crime may be wildly wrong. Without a disciplined way to test competing explanations, investigators and scientists risk over-weighting one fact at the expense of the case as a whole.

ACH gives you that discipline. It forces you to:

Record every hypothesis, including ones you think are remote or uncomfortable.

Evaluate each piece of evidence for whether it’s consistent, inconsistent, or not applicable to each hypothesis.

Maintain an audit trail so future reviewers can see not just what you concluded, but why.
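For readers who like to see the bookkeeping, the three requirements above can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not a standard ACH tool: the names `AchWorksheet`, `Rating`, `rate`, and `unrated` are hypothetical, and the rating codes "C", "I", and "N" are just shorthand for consistent, inconsistent, and not applicable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple

# Shorthand rating codes (an assumption for this sketch).
RATINGS = {"C": "consistent", "I": "inconsistent", "N": "not applicable"}


@dataclass(frozen=True)
class Rating:
    """One cell of the worksheet, plus the reasoning behind it."""
    hypothesis: str
    evidence: str
    rating: str       # "C", "I", or "N"
    rationale: str    # the "why" a future reviewer will need
    analyst: str
    recorded_at: str  # UTC timestamp in ISO 8601 form


@dataclass
class AchWorksheet:
    hypotheses: List[str]
    evidence: List[str]
    log: List[Rating] = field(default_factory=list)  # append-only audit trail

    def rate(self, hypothesis: str, evidence: str, rating: str,
             rationale: str, analyst: str) -> Rating:
        """Record one rating; refuse entries that lack a stated reason."""
        if hypothesis not in self.hypotheses:
            raise ValueError(f"unknown hypothesis: {hypothesis}")
        if evidence not in self.evidence:
            raise ValueError(f"unknown evidence: {evidence}")
        if rating not in RATINGS:
            raise ValueError(f"rating must be one of {sorted(RATINGS)}")
        if not rationale.strip():
            raise ValueError("a rationale is required for the audit trail")
        entry = Rating(hypothesis, evidence, rating, rationale, analyst,
                       datetime.now(timezone.utc).isoformat())
        self.log.append(entry)
        return entry

    def unrated(self) -> List[Tuple[str, str]]:
        """Return every (hypothesis, evidence) pair nobody has rated yet."""
        done = {(r.hypothesis, r.evidence) for r in self.log}
        return [(h, e) for h in self.hypotheses for e in self.evidence
                if (h, e) not in done]


# Placeholder labels only; no real case data.
ws = AchWorksheet(hypotheses=["H1", "H2"], evidence=["E1"])
ws.rate("H1", "E1", "I", "Placeholder rationale: state the conflict here.", "Analyst A")
print(ws.unrated())  # pairs still waiting for a rating
```

The design choice worth noticing is that a rating without a rationale is rejected. A pencil and a sheet of paper do the same job, as long as you hold yourself to the same rule.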

The process is especially valuable in cold cases. With no clock ticking down to an arrest, investigators can systematically reframe the file, separate inference from fact, and identify where new forensic techniques could shift the balance.

A Case in Point

David Keatley’s review of the Audrey Marie Hilley case, a homicide misclassified as a natural death, illustrates the point. Using ACH, the evidence (rumors about workplace contamination, medical diagnoses, family dynamics, financial changes) was laid out against competing scenarios: homicide at home, accidental poisoning at work, natural causes. The resulting matrix didn’t deliver a dramatic “gotcha” moment. What it did was reveal which explanations the evidence quietly undermined, and which withstood that scrutiny. In Hilley’s case, the “home” hypotheses fared better than the “workplace” ones, and the accidental scenarios crumbled faster than the homicidal ones. ACH didn’t solve the case; it structured the thinking that could have kept investigators from shelving the right lead too soon.

Let’s look at a teaching example using a fictitious case.

ACH in Action: The Case of the “Library Fire”

Scenario:

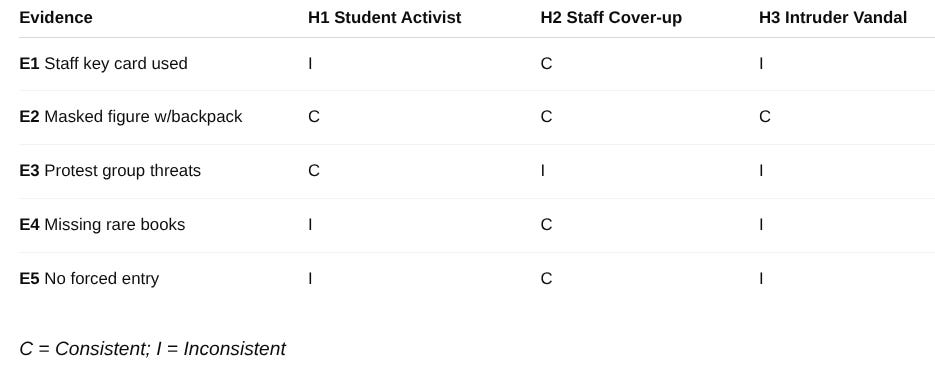

A late-night fire destroys a university library archive. The fire marshal confirms it was arson. Investigators have three plausible hypotheses:

H1: A student activist set the fire in protest.

H2: A staff member burned it to cover theft.

H3: An unrelated intruder set the fire for vandalism.

Step 1 – List the evidence

Significant pieces that could support or refute each hypothesis:

E1: CCTV shows a staff key card used to enter minutes before the fire.

E2: Witness saw a masked figure carrying a backpack leaving the scene.

E3: Recent threats on social media from a student protest group.

E4: Missing rare books from the archive’s special collection.

E5: No signs of forced entry.

Step 2 – Build the ACH matrix[7]

Step 3 – Count inconsistencies

H1: 3 inconsistencies

H2: 0 inconsistencies

H3: 4 inconsistencies
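To make Steps 2 and 3 concrete, here is a minimal Python sketch of the matrix and the tally. The individual cell ratings are my assumption: they are one plausible assignment that reproduces the counts listed above, not a transcription of the original matrix. The function name `count_inconsistencies` is likewise only illustrative.

```python
# Ratings: "C" = consistent, "I" = inconsistent, "N" = not applicable.
# ASSUMPTION: these cell values are illustrative, chosen so the totals
# match the counts given in Step 3. A real analysis would justify each one.
matrix = {
    "E1: staff key card used minutes before the fire": {"H1": "I", "H2": "C", "H3": "I"},
    "E2: masked figure with backpack leaving scene":   {"H1": "C", "H2": "C", "H3": "C"},
    "E3: social media threats from protest group":     {"H1": "C", "H2": "N", "H3": "I"},
    "E4: rare books missing from special collection":  {"H1": "I", "H2": "C", "H3": "I"},
    "E5: no signs of forced entry":                    {"H1": "I", "H2": "C", "H3": "I"},
}


def count_inconsistencies(matrix):
    """Tally the "I" ratings in each hypothesis column."""
    counts = {}
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            counts[hypothesis] = counts.get(hypothesis, 0) + (rating == "I")
    return counts


counts = count_inconsistencies(matrix)

# List hypotheses from least to most refuted.
for hypothesis, n in sorted(counts.items(), key=lambda kv: kv[1]):
    print(f"{hypothesis}: {n} inconsistencies")
```

Note what the tally ignores: the "C" cells. Under these assumed ratings, E2 is consistent with all three hypotheses, so it does nothing to separate them, which is exactly why ACH counts what contradicts a hypothesis rather than what supports it.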

Step 4 – Interpret cautiously

H2 (staff cover-up) has no inconsistent evidence here, which makes it the strongest surviving hypothesis given the current facts. That doesn’t “prove” it’s correct, but it does make it the most promising hypothesis to test next. Investigators could now focus on verifying staff alibis, card access logs, and inventory records.

Why ACH works:

Even in this stripped-down example, ACH forces you to test every piece of evidence against every plausible explanation. The staff cover-up hypothesis didn’t “win” because it sounded likely; it survived because the other hypotheses stumbled over the available facts.

The Real Power: Making the Invisible Visible

Biases in investigation aren’t rare; they’re routine. They start with implicit assumptions, get reinforced by selective evidence gathering, and harden into (wrongful) conviction. You can’t simply will yourself unbiased,[8] any more than you can will yourself to see both lines in an optical illusion as equal length.[9] But you can design procedures that drag your reasoning out into daylight, where it can be challenged.

That’s the quiet magic of ACH. It turns “I think” into “Here’s how I, and you, know what I think.” It creates a living map of the investigative landscape, one you can revisit, revise, and critically defend. It’s not a cure-all; it won’t replace interviews, lab work, or shoe leather. But it will help you resist the tunnel vision that turns plausible leads into dead ends. And in forensic science*, where the stakes are measured in years of liberty and trust in justice,[10] that’s a habit worth cultivating.

[1] At least it keeps me off the streets. Kinda.

[2] No, really, we do. If we didn’t think we were right and good people, we’d never get out of bed in the morning. That existential hubris bleeds over into nearly every decision we make, for good or bad. In fact, you can’t make a decision without emotion, Mr. Spock notwithstanding. Objective, schmujective.

[3] This is actually a combination of “cherry picking,” “confirmation bias,” and “availability bias.” Cherry picking (aka, fallacy of incomplete evidence) is intentionally choosing data that confirm your position while ignoring other contradictory information. Confirmation bias is our tendency, usually operating below the level of awareness, to give more weight to information that supports what we already think is true. We notice and remember the facts that fit our preferred explanation, and we discount or overlook those that don’t. Availability bias is when we rely on readily available information or examples that come to mind easily when making judgments or decisions.

[4] Like “guilty” and “not guilty” (ahem). When we start our reasoning process with, “Well, he either did or didn’t do it,” we’re already on a slippery slope. It’s very much akin to, “If he’s not guilty of this crime, he’s guilty of something; he’s a ‘bad guy’.” Formal reasoning processes in forensic science* used to start with this premise (guilty/not guilty), which is why I’m not a fan. I sat through one early Bayes workshop (the ubiquitous ski mask, or balaclava, example) where the instructor said, “We have the evidence from the scene. Let’s ask the police if they have any other information we can use to inform our decisions” (see previous footnote). One French officer of the Gendarmerie, in full uniform, raised his hand and asked in heavily accented English, “But, zee poleece. What if zey are stoopeed?” I think about that moment a lot.

[5] This is fighting against “proof by assertion,” where something is repeatedly restated despite being contradicted or refuted. Repetition is persuasive, which you already know because you’re bombarded with inane, infuriating, and repetitious messages no matter where you go or what you listen to, except possibly books. I love books.

[6] Anchoring is a cognitive bias where some bit of information becomes a magnet for all other thinking about that topic. If I say something costs $10, that now becomes a norm; if you find the same thing for $8, you think, “It’s on sale!”; conversely, $15 now sounds way too expensive and you’ll probably pass on buying it. The anchor doesn’t even have to be relevant, which is a really scary thing. If I say, “Write down the last two digits of your zip code,” then ask you, “Is this bottle of wine expensive at $35?” your answer will be influenced by whether you live in Buffalo (14209) or Los Angeles (90043). This is a known thing, at least by those who want to sell us stuff. I could go on but you should just read Cialdini’s book.

[8] “We’ve had bias training, so we’re good.” Yeah. Right. Admitting you have a problem is the first step, dummkopf.

[10] And dollars wasted in resources and spent in reparations: The average cost of a wrongful conviction is estimated to be $6.1 million. States have paid out billions of dollars (ours, by the way) in compensation to those wrongfully convicted. I think a piece of paper, a pencil, and an open mind are way cheaper. But that’s just me; I’m simple that way.